Introduction

An AI supercomputer is a cutting-edge computing system designed to handle artificial intelligence workloads far too large for ordinary computers. These machines combine thousands of GPUs or TPUs with high-speed interconnects and use parallel computing to process enormous datasets.

This article explores how AI supercomputers power model training, run complex deep learning algorithms, and accelerate innovation across science, healthcare, and industry.

What Is an AI Supercomputer?

An AI supercomputer is a highly specialized computing system engineered to handle extremely large-scale artificial intelligence tasks. Unlike conventional computers, these systems are built to process enormous datasets, train complex machine learning models, and execute AI algorithms with extraordinary speed and efficiency.

Core Architecture

AI supercomputers rely on thousands of GPUs (Graphics Processing Units) or TPUs (Tensor Processing Units) housed in interconnected compute nodes. Each node is a high-performance server holding several accelerators, and the nodes are linked by ultra-fast network fabrics so that they can operate together as one large cluster. Through parallel processing, a workload is split across many processors that run simultaneously, letting the system tackle computations far too large for any single machine while keeping communication bottlenecks to a minimum.
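Data parallelism is one common way to split training across many accelerators (model and pipeline parallelism are others). The minimal sketch below simulates it in a single Python process under illustrative assumptions: each hypothetical worker computes a gradient for a toy linear model on its own shard of the data, an averaging step stands in for the all-reduce that a real interconnect would perform, and every worker applies the same update. The function names are illustrative, not any framework's API.

```python
"""Minimal sketch of data-parallel gradient averaging, simulated in one process."""

from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair for a toy 1-D linear model y = w*x + b


def local_gradient(w: float, b: float, shard: List[Sample]) -> Tuple[float, float]:
    """Mean-squared-error gradient computed by one worker on its own shard."""
    n = len(shard)
    gw = sum(2 * (w * x + b - y) * x for x, y in shard) / n
    gb = sum(2 * (w * x + b - y) for x, y in shard) / n
    return gw, gb


def all_reduce_mean(grads: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Stand-in for the interconnect's all-reduce: average gradients from all workers."""
    k = len(grads)
    return sum(g[0] for g in grads) / k, sum(g[1] for g in grads) / k


def train_data_parallel(data: List[Sample], workers: int, steps: int, lr: float = 0.01):
    # Split the dataset into equal shards, one per simulated accelerator
    # (any remainder samples are dropped in this sketch).
    shard_size = len(data) // workers
    shards = [data[i * shard_size:(i + 1) * shard_size] for i in range(workers)]
    w, b = 0.0, 0.0
    for _ in range(steps):
        # On a real system these gradients are computed simultaneously on separate devices.
        grads = [local_gradient(w, b, s) for s in shards]
        gw, gb = all_reduce_mean(grads)
        # Every worker applies the same averaged update, so all model replicas stay in sync.
        w -= lr * gw
        b -= lr * gb
    return w, b


if __name__ == "__main__":
    data = [(x / 10, 3.0 * (x / 10) + 1.0) for x in range(40)]  # samples of y = 3x + 1
    w, b = train_data_parallel(data, workers=4, steps=5000, lr=0.05)
    print(f"w={w:.3f}, b={b:.3f}")
```

Because the shards are equal in size, the averaged gradient equals the full-batch gradient, so the result matches single-machine training while each worker touches only a quarter of the data.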

Performance Capabilities

AI supercomputers operate at performance levels measured in petaflops (10^15, or a quadrillion, floating-point operations per second) and exaflops (10^18, or a quintillion, per second). Their throughput exceeds that of a typical laptop by many orders of magnitude, roughly 10^5 to 10^6 times or more depending on the hardware and numeric precision being compared. This capacity allows rapid experimentation, training, and deployment of advanced AI models.
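To see what these throughput figures mean in practice, here is a back-of-the-envelope estimate. It assumes the widely used rule of thumb that training a dense transformer costs about 6 floating-point operations per parameter per training token, a hypothetical 7-billion-parameter model trained on one trillion tokens, and sustained utilization of 40% of peak. All of these numbers are illustrative assumptions, and the exact speed ratio depends on the hardware and precision assumed.

```python
"""Back-of-the-envelope training-time estimate (illustrative assumptions only)."""

SECONDS_PER_DAY = 86_400


def training_flops(params: float, tokens: float) -> float:
    """Rule of thumb for dense transformer training: ~6 FLOPs per parameter per token."""
    return 6.0 * params * tokens


def training_days(total_flops: float, peak_flops_per_s: float, utilization: float = 0.4) -> float:
    """Wall-clock days, assuming the system sustains `utilization` times its peak rate."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return total_flops / (peak_flops_per_s * utilization) / SECONDS_PER_DAY


if __name__ == "__main__":
    work = training_flops(params=7e9, tokens=1e12)  # hypothetical 7B-parameter model
    for name, peak in [("laptop-class GPU (10 TFLOPS)", 1e13),
                       ("1-petaflop system", 1e15),
                       ("1-exaflop system", 1e18)]:
        print(f"{name}: {training_days(work, peak):,.1f} days")
```

Under these assumptions the job takes about 1.2 days on a 1-exaflop system, about 1,215 days on a 1-petaflop system, and more than 300 years on a 10-teraflop laptop-class GPU.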

Primary Purpose

These systems are designed to:

- Train large-scale AI models, including natural language and computer vision models with billions of parameters.
- Analyze and process massive datasets, often terabytes to petabytes in size.
- Execute complex deep learning algorithms that require high computational throughput.
- Accelerate AI research and industrial applications, reducing the time required for model development and deployment.
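Models with billions of parameters outgrow a single accelerator's memory quickly. This sketch estimates memory for a hypothetical 70-billion-parameter model, assuming common rules of thumb for bytes per parameter (including about 16 bytes per parameter for mixed-precision training with the Adam optimizer) and a hypothetical 80 GB accelerator. It ignores activations and communication buffers, so the device counts are lower bounds.

```python
"""Rough memory footprint of a large model at different numeric precisions."""

import math

# Approximate bytes per parameter (assumed rules of thumb, not exact figures).
BYTES_PER_PARAM = {
    "fp32 weights": 4,
    "bf16 weights": 2,
    "fp8 weights": 1,
    # Mixed-precision Adam: 16-bit weights (2) and gradients (2), plus 32-bit
    # master weights (4) and two 32-bit optimizer moments (4 + 4).
    "mixed-precision Adam training": 16,
}


def memory_gb(params: float, bytes_per_param: float) -> float:
    """Memory in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def min_devices(params: float, bytes_per_param: float, device_mem_gb: float) -> int:
    """Lower bound on devices: ignores activations, buffers, and parallelism overheads."""
    return math.ceil(memory_gb(params, bytes_per_param) / device_mem_gb)


if __name__ == "__main__":
    params = 70e9  # hypothetical 70-billion-parameter model
    for label, bpp in BYTES_PER_PARAM.items():
        print(f"{label:32s} {memory_gb(params, bpp):8,.0f} GB "
              f"-> at least {min_devices(params, bpp, 80):3d} x 80 GB devices")
```

Under these assumptions the weights alone fit in 70 GB at FP8, but the full training state needs about 1,120 GB, or at least 14 such devices, which is one reason training is spread across many nodes.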

Why AI Supercomputers Matter

By combining ultra-fast processors, parallel architectures, and enormous computational capacity, AI supercomputers enable breakthroughs that would be impractical on standard machines. They power innovation in fields such as healthcare, climate modeling, autonomous systems, and financial forecasting, supporting AI development at a global scale.

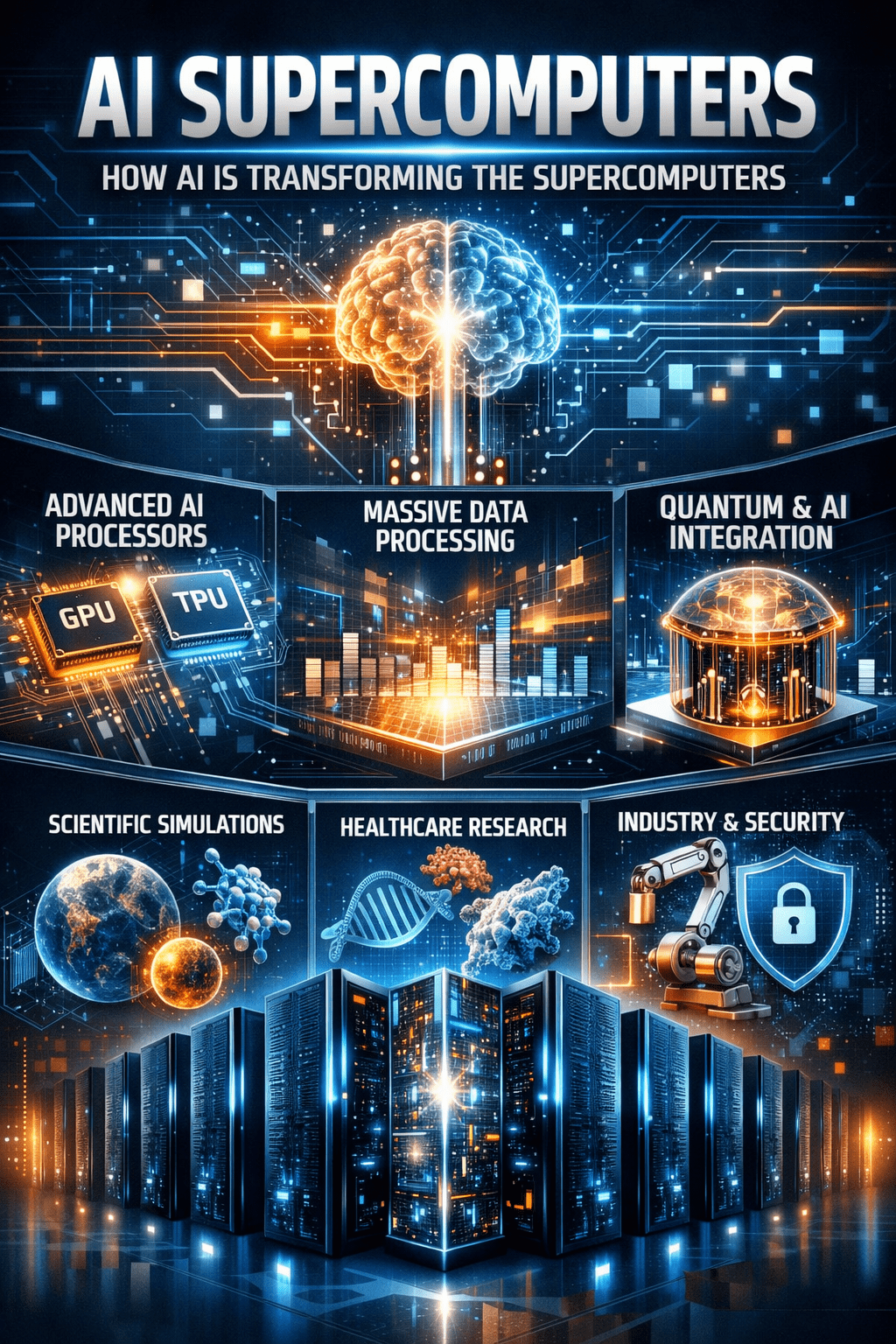

How AI is Transforming Supercomputers

Specialized Hardware Evolution

Modern supercomputers increasingly rely on GPUs and custom AI accelerators for the bulk of their computation, with standard CPUs mainly coordinating work and handling general-purpose tasks. Systems such as xAI’s Colossus employ around 200,000 AI chips, providing extraordinary computing density for machine learning and deep learning. This shift allows supercomputers to run both scientific simulations and industrial AI applications efficiently.

Performance Scaling

AI workloads have dramatically increased computational demands. The performance of leading AI supercomputers has been doubling roughly every nine months, driven by specialized hardware and optimized architectures. Google’s Ironwood (TPU v7), for example, is engineered for high-speed AI inference and supports lower-precision FP8 arithmetic, making model serving faster and more energy-efficient.
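A nine-month doubling period compounds quickly. The minimal sketch below projects the growth factor, assuming the trend holds steadily, which is an extrapolation rather than a guarantee; the function names are illustrative.

```python
"""Compound growth implied by a fixed performance-doubling period (assumed steady)."""

import math


def projected_growth(months: float, doubling_months: float = 9.0) -> float:
    """Performance multiplier after `months` if it doubles every `doubling_months`."""
    return 2.0 ** (months / doubling_months)


def months_to_reach(factor: float, doubling_months: float = 9.0) -> float:
    """Months needed for performance to grow by `factor` at the same doubling rate."""
    return doubling_months * math.log2(factor)


if __name__ == "__main__":
    for years in (1, 3, 5):
        print(f"{years} year(s): x{projected_growth(12 * years):.1f}")
    print(f"10x takes about {months_to_reach(10):.0f} months")
```

At that rate performance would grow 16-fold in three years, and a 10-fold gain would take about 30 months.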

Self-Optimizing Architecture

AI integration is extending to the system software of supercomputers, such as job schedulers and management tools, enabling automated resource allocation, predictive hardware maintenance, and optimized energy consumption. This self-optimizing approach helps keep operation stable under heavy AI workloads and reduces downtime caused by faults or inefficiencies.
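Production systems use far richer models, but one basic ingredient of predictive maintenance can be sketched simply: smooth each node's temperature telemetry and flag nodes whose trend shows sustained overheating while ignoring one-off spikes. This is a deliberately simple statistical stand-in, not a machine-learning model; the node names, readings, smoothing factor, and 85 °C threshold are all hypothetical.

```python
"""Toy anomaly flagging for node telemetry with an exponentially weighted moving average."""

from typing import Dict, List


def ewma(values: List[float], alpha: float = 0.3) -> float:
    """Final exponentially weighted moving average of a series (higher alpha reacts faster)."""
    if not values:
        raise ValueError("need at least one reading")
    avg = values[0]
    for v in values[1:]:
        avg = alpha * v + (1 - alpha) * avg
    return avg


def nodes_needing_attention(telemetry: Dict[str, List[float]], limit_c: float = 85.0,
                            alpha: float = 0.3) -> List[str]:
    """Return nodes whose smoothed temperature exceeds `limit_c` (hypothetical threshold)."""
    return sorted(node for node, temps in telemetry.items() if ewma(temps, alpha) > limit_c)


if __name__ == "__main__":
    readings = {
        "node-001": [70, 71, 69, 72, 70],  # stable
        "node-002": [75, 80, 86, 90, 93],  # steadily heating up
        "node-003": [70, 95, 71, 70, 72],  # single spike, smoothed away
    }
    print(nodes_needing_attention(readings))
```

A scheduler could then drain flagged nodes and move their jobs elsewhere before a failure, which is the kind of automated response the paragraph above describes.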

Advanced Cooling Systems

Modern AI accelerators can draw 1,000 watts or more per GPU, generating extreme heat. Supercomputers are adopting direct-to-chip liquid cooling and closed-loop systems to maintain stability, allowing much denser computing than air cooling could support.
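The cooling challenge can be quantified with the standard heat balance Q = ṁ · c_p · ΔT, where Q is the heat load in watts, ṁ the coolant mass flow, c_p its specific heat, and ΔT the coolant's temperature rise. The sketch assumes a hypothetical rack of 72 GPUs at 1,000 W each, that essentially all electrical power becomes heat, water as the coolant, and a 10 K temperature rise.

```python
"""Coolant flow needed to carry away a rack's heat, from Q = m_dot * c_p * delta_T."""

WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), approximate value for liquid water


def rack_heat_watts(gpus: int, watts_per_gpu: float, overhead_fraction: float = 0.0) -> float:
    """Heat to remove, assuming nearly all electrical power ends up as heat."""
    return gpus * watts_per_gpu * (1 + overhead_fraction)


def coolant_flow_kg_per_s(heat_w: float, delta_t_k: float,
                          specific_heat: float = WATER_SPECIFIC_HEAT) -> float:
    """Mass flow m_dot = Q / (c_p * delta_T)."""
    return heat_w / (specific_heat * delta_t_k)


if __name__ == "__main__":
    heat = rack_heat_watts(gpus=72, watts_per_gpu=1000)  # hypothetical rack
    flow = coolant_flow_kg_per_s(heat, delta_t_k=10)
    # For water, 1 kg is roughly 1 litre.
    print(f"{heat / 1000:.0f} kW -> {flow:.2f} kg/s (~{flow * 60:.0f} L/min)")
```

Under these assumptions the rack produces 72 kW and needs roughly 1.7 kg of water per second, about 100 litres per minute; halving the allowed temperature rise would double the required flow.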

Hybrid Quantum-AI Integration

Emerging “quantum-accelerated supercomputing” links AI supercomputers with quantum processors and quantum simulation. Supercomputers are already used to simulate quantum algorithms classically, supporting research in materials science, cryptography, and optimization problems that are difficult to solve with classical methods alone.
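Classical simulation of a quantum circuit typically stores 2^n complex amplitudes for n qubits, so memory doubles with every added qubit. The toy state-vector simulator below, in plain Python with illustrative function names, builds a two-qubit Bell state and reports memory requirements assuming 16 bytes per amplitude (double-precision complex numbers). It is a teaching sketch, not a production simulator.

```python
"""Toy state-vector simulator showing why simulating qubits is memory-hungry."""

import math
from typing import List, Sequence

State = List[complex]


def apply_single_qubit(state: State, gate: Sequence[Sequence[float]], target: int) -> State:
    """Apply a 2x2 gate to qubit `target` (qubit 0 is the least significant bit)."""
    new = state[:]
    step = 1 << target
    for i in range(len(state)):
        if (i & step) == 0:  # visit each (target=0, target=1) amplitude pair once
            a, b = state[i], state[i | step]
            new[i] = gate[0][0] * a + gate[0][1] * b
            new[i | step] = gate[1][0] * a + gate[1][1] * b
    return new


def apply_cnot(state: State, control: int, target: int) -> State:
    """Flip the target qubit in every basis state where the control qubit is 1."""
    new = state[:]
    for i in range(len(state)):
        if (i >> control) & 1:
            new[i ^ (1 << target)] = state[i]
    return new


def state_vector_bytes(qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for 2**qubits complex amplitudes (16 bytes each in double precision)."""
    return (2 ** qubits) * bytes_per_amplitude


if __name__ == "__main__":
    h = 1 / math.sqrt(2)
    hadamard = [[h, h], [h, -h]]
    state = [1 + 0j, 0j, 0j, 0j]  # two qubits, both in |0>
    state = apply_single_qubit(state, hadamard, target=0)
    state = apply_cnot(state, control=0, target=1)  # now a Bell state
    print("probabilities:", [round(abs(a) ** 2, 3) for a in state])
    for n in (30, 40, 50):
        print(f"{n} qubits: {state_vector_bytes(n) / 1e12:,.3f} TB")
```

The two-qubit run ends with equal 0.5 probabilities for |00⟩ and |11⟩. At 16 bytes per amplitude, 30 qubits need about 17 GB, 40 qubits about 17.6 TB, and 50 qubits about 18 PB, which is why large quantum simulations call for supercomputer-scale memory.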

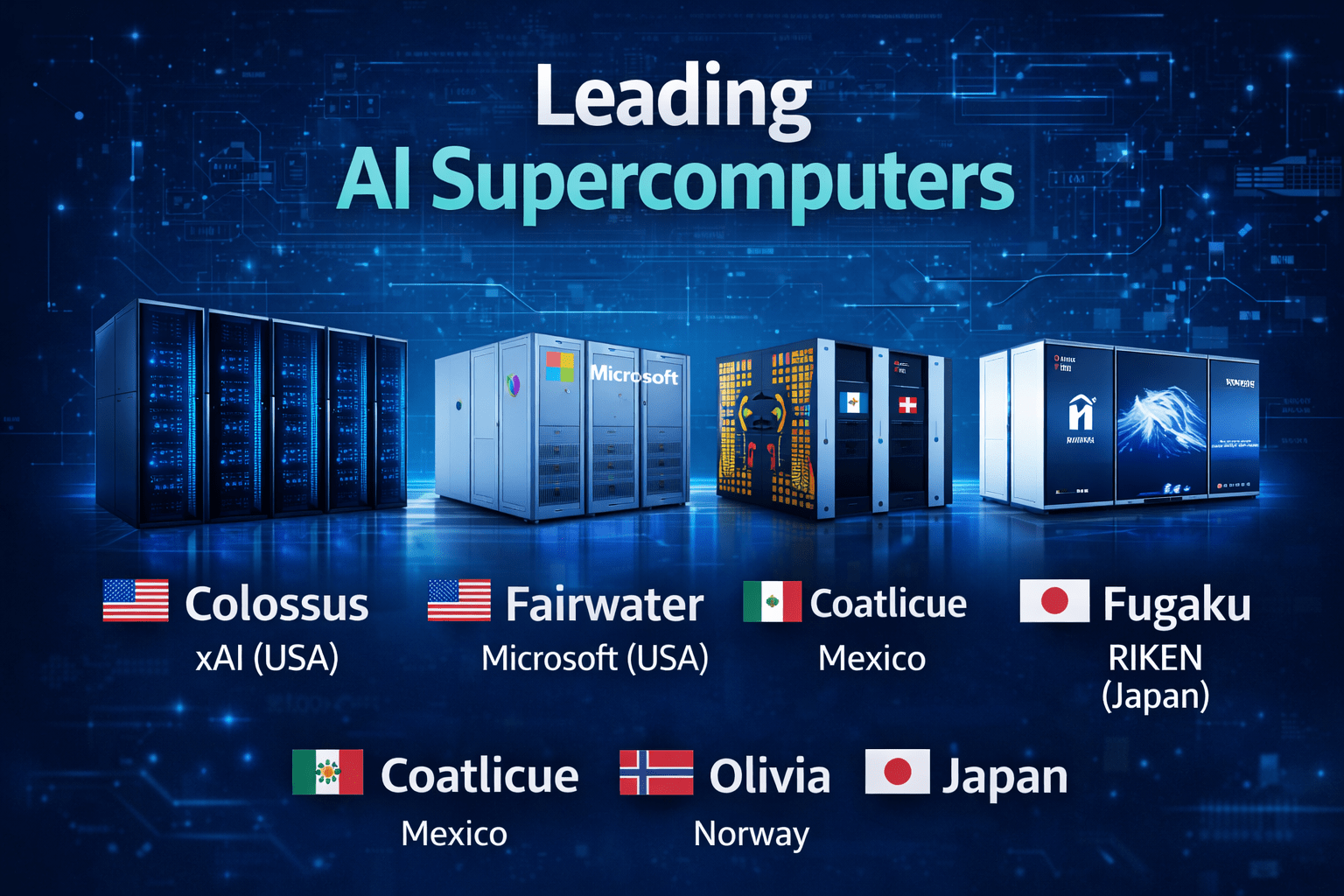

Leading AI Supercomputers

| System | Organization/Location | Key Feature |
|---|---|---|
| Colossus | xAI (USA) | Around 200,000 AI chips; among the largest AI training systems |
| Fairwater | Microsoft (USA) | Claimed 10x the performance of conventional supercomputers |
| Coatlicue | Mexico | 14,480 GPUs for national AI initiatives |
| Olivia | Norway | Norway’s most powerful AI-ready supercomputer, built by HPE |
| Fugaku | RIKEN (Japan) | Former world-leading supercomputer, now also used for AI training |

These systems demonstrate the convergence of AI and HPC, showcasing high-speed computation, massive parallelism, and specialized architectures tailored for AI workloads.

Colossus — xAI (USA)

Colossus is a large-scale AI supercomputer built by xAI in the United States. With around 200,000 AI chips, it is one of the most powerful systems for training advanced AI models, and its scale shortens the time needed to train and iterate on them.

Fairwater — Microsoft (USA)

Fairwater is Microsoft’s AI-focused supercomputing system. Microsoft says it delivers roughly ten times the performance of conventional supercomputers by combining AI-optimized hardware with advanced cloud infrastructure, and it is designed to support large language models and enterprise AI workloads.

Coatlicue — National AI Program (Mexico)

Coatlicue is Mexico’s flagship AI supercomputer built to support national research and public-sector innovation. Equipped with 14,480 GPUs, it enables large-scale data analysis, scientific research, and AI development, strengthening the country’s independent AI capabilities.

Olivia — Norway

Olivia is Norway’s most powerful AI-ready supercomputer, developed in collaboration with HPE. It is designed to support climate research, energy modeling, healthcare analysis, and industrial AI projects, helping Norway advance data-driven decision-making across sectors.

Fugaku — RIKEN (Japan)

Fugaku is a globally recognized supercomputer developed by RIKEN in Japan. Built for scientific computing around Arm-based A64FX processors rather than GPUs, it has since also been applied to AI training and deep learning workloads. Its balanced architecture supports both traditional simulations and modern AI applications at national scale.

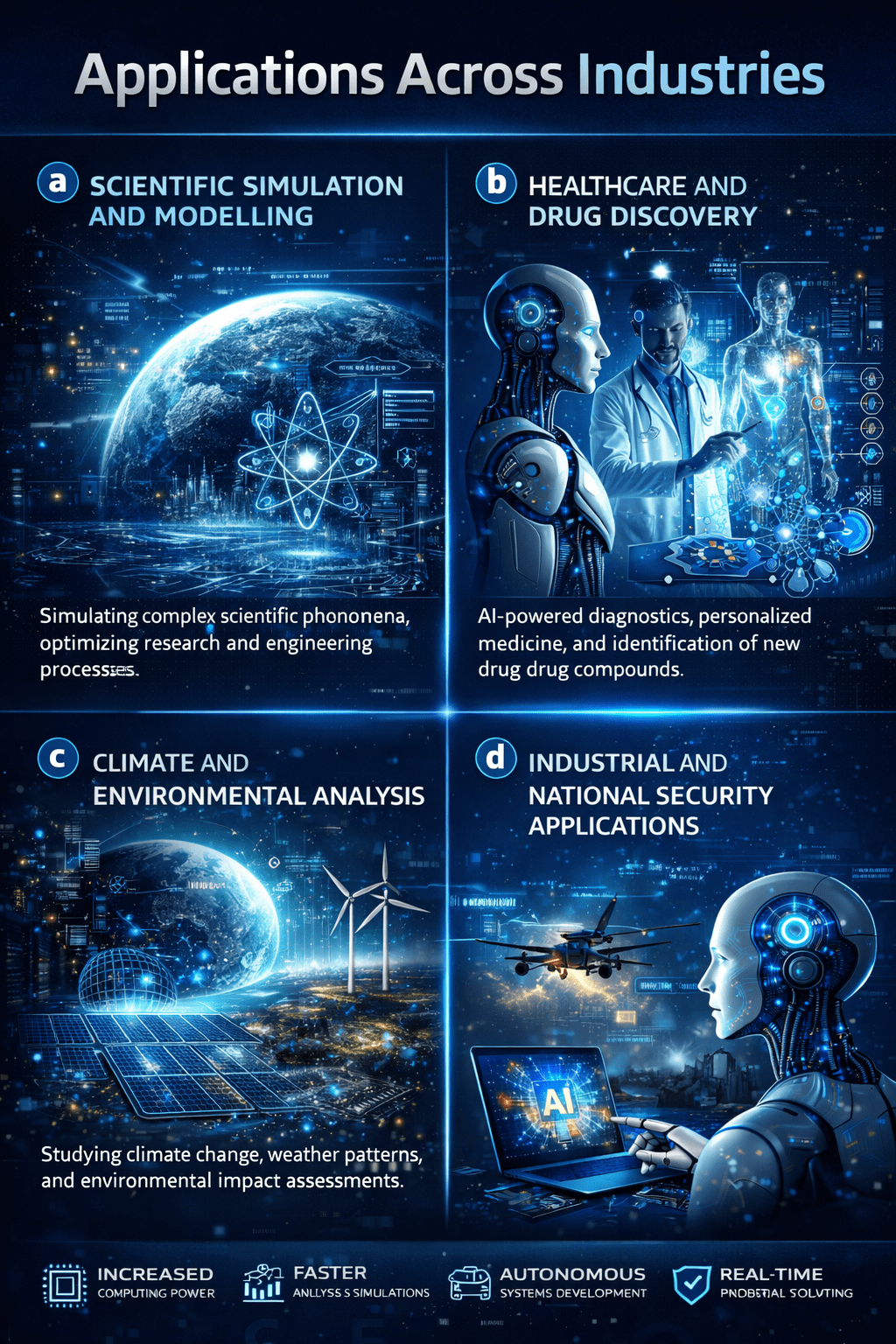

Applications Across Industries

Scientific Simulation and Modelling

AI supercomputers enable adaptive simulations in physics, chemistry, climate science, and genomics. Machine learning helps refine parameters, identify patterns, and accelerate discoveries, in some cases reducing simulation times from months to days.
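One way machine learning speeds up simulation work is a surrogate model: a cheap approximation fitted to a handful of expensive runs, which can then be queried many times, for example to search for good parameters. The toy sketch below fits a quadratic by least squares to five calls of a hypothetical `expensive_simulation` function. Real surrogates typically use richer models such as neural networks or Gaussian processes, and the toy function here is chosen so the fit is exact.

```python
"""Toy surrogate model: fit a cheap approximation to a few expensive simulation runs."""

from typing import Callable, List, Sequence


def expensive_simulation(x: float) -> float:
    # Hypothetical stand-in for a costly physics run; imagine each call takes hours.
    return 0.5 * x * x - 2.0 * x + 3.0


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution on the upper-triangular system
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_quadratic(xs: Sequence[float], ys: Sequence[float]) -> Callable[[float], float]:
    """Least-squares fit of y = c0 + c1*x + c2*x^2 via the normal equations."""
    feats = [[1.0, x, x * x] for x in xs]
    ata = [[sum(f[i] * f[j] for f in feats) for j in range(3)] for i in range(3)]
    aty = [sum(f[i] * y for f, y in zip(feats, ys)) for i in range(3)]
    c0, c1, c2 = solve(ata, aty)
    return lambda x: c0 + c1 * x + c2 * x * x


if __name__ == "__main__":
    train_x = [0.0, 1.0, 2.0, 3.0, 4.0]  # only five expensive runs
    surrogate = fit_quadratic(train_x, [expensive_simulation(x) for x in train_x])
    # The surrogate can now be evaluated hundreds of times at negligible cost.
    best = min((i / 100 for i in range(401)), key=surrogate)
    print(f"surrogate minimum near x = {best:.2f}")
```

The search evaluates the surrogate 401 times but the expensive simulation only five times; in practice, candidates the surrogate finds promising would be confirmed with full simulation runs.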

Healthcare and Drug Discovery

AI-powered supercomputers process massive genomic and molecular datasets to accelerate drug discovery. They allow researchers to predict protein interactions, optimize treatments, and develop personalized medicine more efficiently.

Climate and Environmental Analysis

Weather forecasting and climate modelling benefit from AI-driven HPC. Supercomputers integrate satellite, sensor, and environmental data to predict storms, floods, and long-term climate shifts with higher accuracy.

Industrial and National Security Applications

From financial modelling to autonomous systems and cryptography, AI supercomputers enhance analytics, predictive modelling, and risk assessment. Governments and industries leverage these systems for real-time decision-making and secure operations.

Conclusion

Supercomputers are no longer just about speed. AI integration has turned them into adaptive platforms that both train large models and use AI to optimize their own operation, accelerating research and industrial applications alike. From healthcare and climate science to national security and advanced AI, these systems are redefining the limits of computation. This convergence marks a new era in which intelligence and processing power work hand in hand, driving innovation across many domains.

Recommendation

For organizations aiming to leverage AI supercomputing, focus on workloads requiring large-scale simulation, predictive modeling, or deep learning. Use cloud-based HPC platforms or partnerships with national research facilities to access AI-accelerated hardware like GPUs and TPUs. Implement modular software that scales across parallel nodes and monitor resource efficiency with AI-driven management systems. Start with pilot projects to explore potential impact and gradually expand AI-supercomputing applications for practical, high-value results.
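Before expanding a pilot, it helps to check whether the software actually scales across nodes. Amdahl's law gives the ideal speedup S(N) = 1 / ((1 - p) + p/N) for a workload whose parallelizable fraction is p, and comparing measured runtimes against a single-node baseline yields the parallel efficiency. In the sketch below, the pilot timings and p = 0.99 are hypothetical assumptions.

```python
"""Pilot-project scaling check: Amdahl's law versus measured parallel efficiency."""

from typing import Dict


def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Ideal speedup when only `parallel_fraction` of the runtime can be parallelized."""
    if not 0.0 <= parallel_fraction <= 1.0 or workers < 1:
        raise ValueError("parallel_fraction must be in [0, 1] and workers >= 1")
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / workers)


def parallel_efficiency(runtimes: Dict[int, float]) -> Dict[int, float]:
    """Efficiency = (T1 / TN) / N for each measured worker count N (needs a 1-node run)."""
    t1 = runtimes[1]
    return {n: (t1 / t) / n for n, t in sorted(runtimes.items())}


if __name__ == "__main__":
    # Hypothetical wall-clock times in seconds from a pilot run on 1, 8, and 64 nodes.
    measured = {1: 6400.0, 8: 900.0, 64: 200.0}
    for n, eff in parallel_efficiency(measured).items():
        print(f"{n:3d} nodes: efficiency {eff:.0%}, "
              f"Amdahl bound at p=0.99: {amdahl_speedup(0.99, n):.1f}x")
```

If efficiency falls off sharply as nodes are added, the serial fraction or communication overhead is the bottleneck, and scaling the pilot further will buy little.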

FAQs

Q1: How is AI changing supercomputers?

AI optimizes workloads, manages resources, and accelerates data-intensive simulations.

Q2: What industries benefit most from AI supercomputers?

Healthcare, climate science, finance, engineering, and national security.

Q3: Can smaller organizations access AI supercomputing?

Yes, cloud-based HPC and national partnerships provide scalable access.