Introduction

AI infrastructure is the integrated environment of hardware and software designed specifically to support artificial intelligence and machine learning workloads. It enables model training, inference, and governance at scale through high-throughput compute, low-latency networking, and large-scale data handling. Unlike traditional IT systems, AI infrastructure is built for parallel processing and continuously changing data.

AI infrastructure now underpins everything from enterprise analytics to autonomous systems. As adoption expands across the UK and USA, it has shifted from a technical concern to a core business capability. Performance, cost control, compliance, and trust all depend on infrastructure decisions made early.

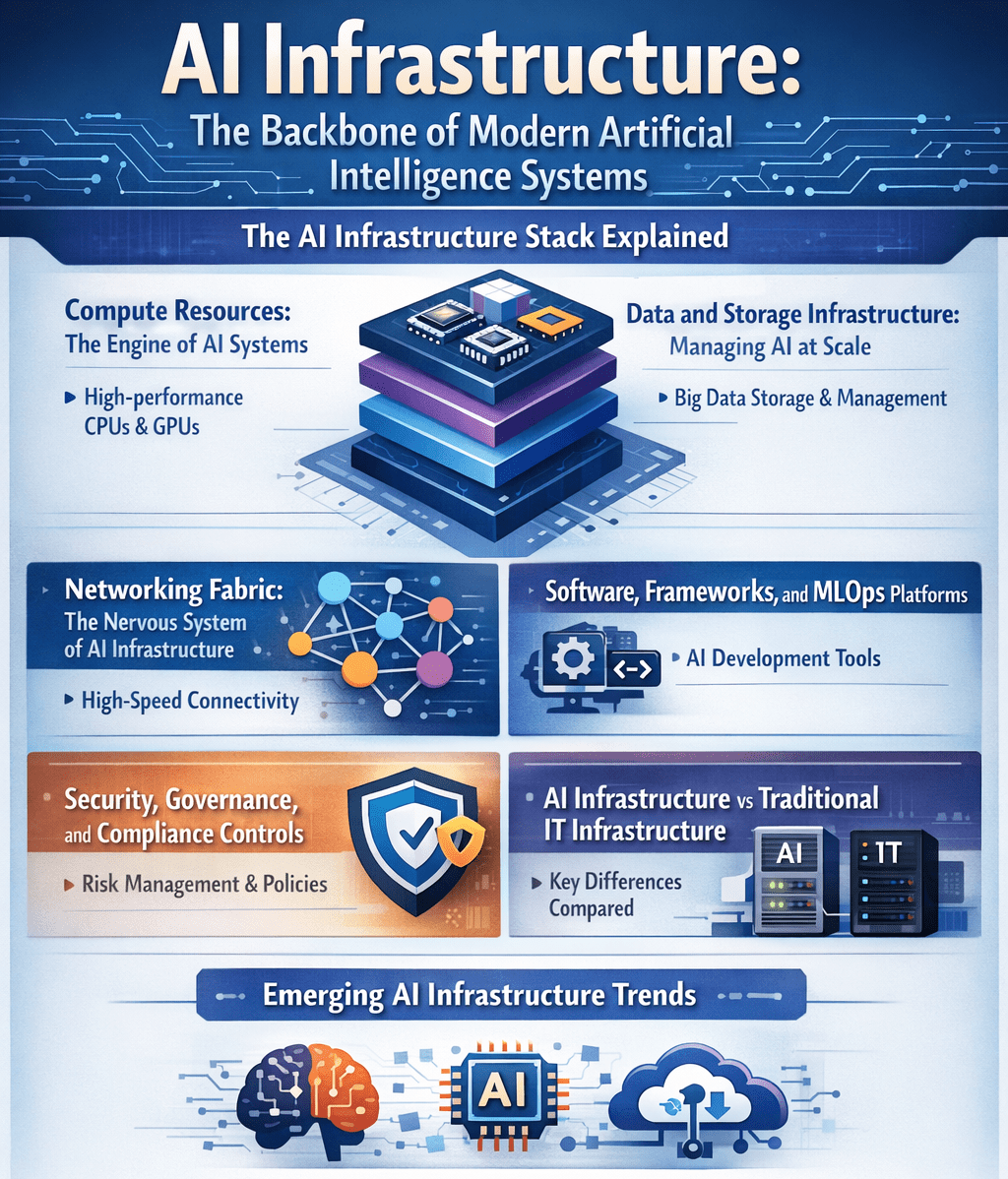

The AI Infrastructure Stack Explained

Modern AI infrastructure is often described as an “AI factory.” This model reflects how data, compute, and software operate as a continuous production system rather than isolated tools.

The layers are interdependent: a weakness in one limits the value of the entire AI system.

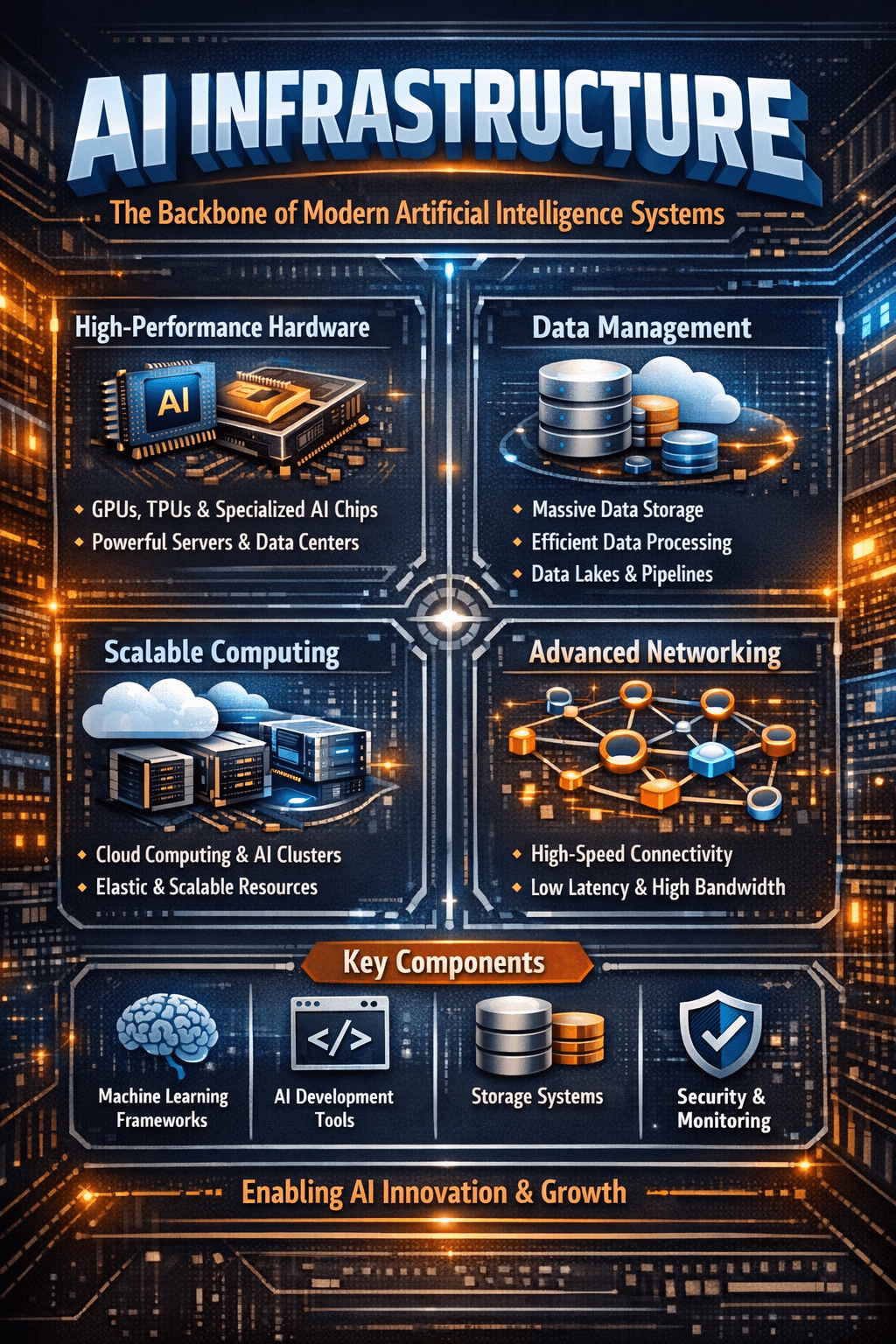

Compute Resources: The Engine of AI Systems

Compute infrastructure provides the processing power required for AI workloads. This includes CPUs for orchestration, GPUs for parallel computation, and specialized accelerators such as TPUs for deep learning.

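AI models, especially deep learning models, are dominated by matrix operations that break down into many independent multiply-add steps, so those steps can run simultaneously. GPUs and accelerators outperform traditional CPUs on these workloads by executing thousands of such operations in parallel. Without this capability, training times become impractical and inference costs rise sharply.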
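To see why matrix multiplication parallelizes so well, the sketch below computes each output cell of C = A x B as a separate task. The function names are hypothetical, and Python threads are used only to show that no cell depends on another; they do not reproduce GPU-level speedups.

```python
from concurrent.futures import ThreadPoolExecutor


def matmul_cell(a, b, i, j):
    """Compute one output element C[i][j] as the dot product of row i of A and column j of B."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_sequential(a, b):
    """Reference implementation: compute every cell one after another."""
    rows, cols = len(a), len(b[0])
    return [[matmul_cell(a, b, i, j) for j in range(cols)] for i in range(rows)]


def matmul_parallel(a, b, workers=4):
    """Submit every (i, j) cell as an independent task.

    No cell depends on another cell's result, so the tasks may run in any order
    or at the same time. Python threads only illustrate that independence; they
    do not deliver GPU-style speedups.
    """
    rows, cols = len(a), len(b[0])
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {
            (i, j): pool.submit(matmul_cell, a, b, i, j)
            for i in range(rows)
            for j in range(cols)
        }
        return [[futures[(i, j)].result() for j in range(cols)] for i in range(rows)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul_parallel(A, B))  # [[19, 22], [43, 50]]
```

A GPU applies the same decomposition across thousands of hardware cores, which is where the real throughput gain comes from.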

In production environments, insufficient compute leads to delayed insights and unreliable service, such as slow or timed-out inference requests.
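A rough way to see how compute capacity translates into delay is to estimate training time. The sketch below assumes the widely used rule of thumb that training a dense transformer costs about 6 FLOPs per parameter per training token. The model size, token count, per-accelerator peak throughput, and 40% utilization are hypothetical inputs, not vendor figures.

```python
SECONDS_PER_DAY = 86_400


def training_days(params, tokens, num_accelerators, peak_flops_per_accel, utilization):
    """Estimate wall-clock training time with the ~6 * params * tokens FLOPs rule of thumb."""
    total_flops = 6 * params * tokens
    # Real hardware rarely sustains peak throughput, hence the utilization factor.
    sustained_flops_per_second = num_accelerators * peak_flops_per_accel * utilization
    return total_flops / sustained_flops_per_second / SECONDS_PER_DAY


# Hypothetical scenario: 7B-parameter model, 1T training tokens,
# accelerators with 1e15 FLOP/s peak throughput at 40% utilization.
for n in (8, 64):
    print(n, "accelerators:", round(training_days(7e9, 1e12, n, 1e15, 0.4), 1), "days")
# 8 accelerators: 151.9 days
# 64 accelerators: 19.0 days
```

The same job drops from roughly five months to under three weeks when compute is scaled up, which is the practical meaning of "impractical training times".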

Data and Storage Infrastructure: Managing AI at Scale

Data infrastructure supports how data is collected, stored, processed, and retrieved. AI systems depend heavily on unstructured data such as text, images, audio, and video.

Scalable storage solutions include data lakes, distributed file systems, and, increasingly, vector databases for semantic search and retrieval. These systems let AI models access large datasets efficiently while maintaining lineage and version control.
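The core idea behind vector-database retrieval can be sketched in a few lines: documents are stored as embedding vectors, and a query returns the most similar ones by cosine similarity. This is a hypothetical brute-force index with toy 3-dimensional vectors; production vector databases use learned embeddings with hundreds of dimensions and approximate nearest-neighbour indexes to stay fast at scale.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norms if norms else 0.0


class TinyVectorIndex:
    """Hypothetical brute-force index: stores (doc_id, embedding) pairs in memory."""

    def __init__(self):
        self._items = []

    def add(self, doc_id, embedding):
        self._items.append((doc_id, embedding))

    def search(self, query, k=2):
        """Return the k doc_ids whose embeddings are most similar to the query."""
        scored = [(cosine_similarity(query, emb), doc_id) for doc_id, emb in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [doc_id for _, doc_id in scored[:k]]


# Toy 3-dimensional "embeddings"; real ones come from an embedding model.
index = TinyVectorIndex()
index.add("invoice-policy", [0.9, 0.1, 0.0])
index.add("gpu-guide", [0.1, 0.9, 0.2])
index.add("cooling-faq", [0.0, 0.3, 0.9])
print(index.search([0.2, 0.8, 0.1]))  # ['gpu-guide', 'cooling-faq']
```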

Strong data infrastructure improves model accuracy and reduces operational risk, especially in regulated industries.
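Lineage and version control are what let a regulated organization answer "which data produced this model?" One common approach is content addressing: a dataset's version ID is a hash of its contents, and each transformation records which versions it consumed. The sketch below is hypothetical (the `LineageLog` class is illustrative, not a specific product's API) and keeps everything in memory.

```python
import hashlib
import json


def fingerprint(records):
    """Content-address a dataset: identical records always yield the same version ID."""
    canonical = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


class LineageLog:
    """Hypothetical append-only log of which datasets were derived from which."""

    def __init__(self):
        self.entries = []

    def record(self, output_records, input_ids, step):
        """Register a derived dataset and return its version ID."""
        output_id = fingerprint(output_records)
        self.entries.append({"output": output_id, "inputs": list(input_ids), "step": step})
        return output_id

    def ancestors(self, dataset_id):
        """Walk the log backwards to collect every upstream dataset version."""
        found, stack = set(), [dataset_id]
        while stack:
            current = stack.pop()
            for entry in self.entries:
                if entry["output"] == current:
                    for parent in entry["inputs"]:
                        if parent not in found:
                            found.add(parent)
                            stack.append(parent)
        return found


raw = [{"id": 1, "text": "  Hello "}, {"id": 2, "text": "WORLD"}]
raw_id = fingerprint(raw)

log = LineageLog()
clean = [{"id": r["id"], "text": r["text"].strip().lower()} for r in raw]
clean_id = log.record(clean, [raw_id], "strip+lowercase")
train = clean[:1]
train_id = log.record(train, [clean_id], "train split")
print(log.ancestors(train_id) == {clean_id, raw_id})  # True
```

Because IDs are derived from content, re-running the same pipeline on the same data reproduces the same IDs, which makes audits repeatable.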

Networking Fabric: The Nervous System of AI Infrastructure

High-performance networking connects compute and storage layers. AI infrastructure requires low-latency, high-bandwidth networks to prevent bottlenecks during training and inference.

Technologies such as InfiniBand and high-speed Ethernet enable rapid data movement across clusters. Without this capability, expensive compute resources sit idle while waiting for data.
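A back-of-the-envelope calculation shows how link bandwidth turns into idle compute. In data-parallel training, workers commonly synchronize gradients with a ring all-reduce, in which each worker sends roughly 2(N - 1)/N times the gradient size per step. The sketch below assumes a hypothetical 7B-parameter model with 2-byte gradients, 8 workers, one second of compute per step, and no overlap between communication and computation.

```python
def ring_allreduce_seconds(num_params, bytes_per_param, num_workers, link_gbps):
    """Approximate time for one ring all-reduce of the full gradient.

    In a ring all-reduce each worker sends about 2 * (N - 1) / N times the payload.
    Ignores latency, protocol overhead, and overlap with computation.
    """
    payload_bytes = num_params * bytes_per_param
    sent_bytes = 2 * (num_workers - 1) / num_workers * payload_bytes
    return sent_bytes * 8 / (link_gbps * 1e9)  # bytes -> bits, Gb/s -> b/s


def idle_fraction(compute_seconds, comm_seconds):
    """Share of each step the accelerators wait on the network (no overlap assumed)."""
    return comm_seconds / (compute_seconds + comm_seconds)


# Hypothetical: 7B parameters, 2-byte gradients, 8 workers, 1 s of compute per step.
for gbps in (100, 400):
    comm = ring_allreduce_seconds(7e9, 2, 8, gbps)
    print(f"{gbps} Gb/s: sync {comm:.2f} s, idle {idle_fraction(1.0, comm):.0%}")
# 100 Gb/s: sync 1.96 s, idle 66%
# 400 Gb/s: sync 0.49 s, idle 33%
```

Real frameworks overlap communication with the backward pass, so actual idle time is lower, but the trend holds: faster links keep expensive accelerators busy.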

Efficient networking directly improves training speed and infrastructure utilization.

Software, Frameworks, and MLOps Platforms

AI software frameworks simplify model development and deployment. Widely adopted tools such as TensorFlow and PyTorch provide standardized environments for building models.

MLOps platforms manage the full lifecycle of AI systems. This includes versioning, testing, deployment, and monitoring. Tools like MLflow and Kubeflow automate workflows and reduce operational errors.
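The versioning, testing, and deployment steps can be illustrated with a tiny model registry. This is a hypothetical sketch, not the MLflow or Kubeflow API: each registered model gets a version number, promotion to production is gated on a minimum accuracy, and the previous production version is archived rather than deleted.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: int
    metrics: dict
    stage: str = "staging"


@dataclass
class ModelRegistry:
    """Hypothetical minimal registry: versioning, a test gate, and stage tracking."""

    name: str
    versions: list = field(default_factory=list)

    def register(self, metrics):
        """Store a new model version with its evaluation metrics."""
        mv = ModelVersion(version=len(self.versions) + 1, metrics=dict(metrics))
        self.versions.append(mv)
        return mv

    def promote(self, version, min_accuracy):
        """Deploy a version only if it passes the test gate; archive the old one."""
        candidate = self.versions[version - 1]
        if candidate.metrics.get("accuracy", 0.0) < min_accuracy:
            raise ValueError(f"v{version} failed gate: accuracy < {min_accuracy}")
        for mv in self.versions:
            if mv.stage == "production":
                mv.stage = "archived"
        candidate.stage = "production"
        return candidate

    def production(self):
        """Return the version currently serving traffic, if any."""
        return next((mv for mv in self.versions if mv.stage == "production"), None)


registry = ModelRegistry("churn-model")
registry.register({"accuracy": 0.91})
registry.promote(1, min_accuracy=0.90)
registry.register({"accuracy": 0.84})
try:
    registry.promote(2, min_accuracy=0.90)
except ValueError as err:
    print(err)  # v2 failed gate: accuracy < 0.9
print(registry.production().version)  # 1
```

Keeping archived versions makes rollback a matter of promoting an earlier entry.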

Without MLOps, AI systems can degrade silently as production data drifts away from the data the model was trained on, leading to unreliable decisions and loss of trust.
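Monitoring catches this kind of silent degradation by comparing production inputs against training data. One widely used statistic is the population stability index (PSI), which sums (actual share minus expected share) times the log of their ratio across bins. The sketch below uses hypothetical feature values and bin edges; the 0.1 and 0.25 thresholds are commonly cited rules of thumb, not a standard.

```python
import bisect
import math


def bin_shares(values, edges, eps=1e-6):
    """Fraction of values per bin; out-of-range values go to the nearest edge bin."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        counts[min(max(idx, 0), len(counts) - 1)] += 1
    # eps avoids log(0) for empty bins.
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(expected, actual, edges):
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    e = bin_shares(expected, edges)
    a = bin_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def drift_status(psi):
    """Commonly cited rule-of-thumb thresholds; tune them per feature."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate drift"
    return "significant drift"


edges = [0, 25, 50, 75, 100]
training = list(range(100))                 # feature values seen at training time
prod_similar = list(range(0, 100, 2))       # production sample with the same spread
prod_shifted = [v + 40 for v in range(60)]  # production values drifted upward

for name, sample in (("similar", prod_similar), ("shifted", prod_shifted)):
    score = population_stability_index(training, sample, edges)
    print(name, round(score, 3), drift_status(score))
```

In practice a check like this would run on a schedule for each important feature and raise an alert when drift crosses the chosen threshold.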

Security, Governance, and Compliance Controls

Security is a foundational element of AI infrastructure. It protects sensitive data, intellectual property, and model integrity.

Key controls include encryption, role-based access control, audit logging, and compliance frameworks. Governance defines who can train, deploy, and modify models, ensuring accountability.
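Role-based access control and audit logging fit together naturally: every request to train, deploy, or modify a model is checked against the caller's role, and the decision is recorded whether it is allowed or denied. The roles and permissions below are hypothetical examples; real deployments would load them from an identity provider or policy engine.

```python
from datetime import datetime, timezone

# Hypothetical role -> permitted actions mapping. In practice this would come
# from an identity provider or policy engine rather than a hard-coded dict.
ROLE_PERMISSIONS = {
    "data_scientist": {"train"},
    "ml_engineer": {"train", "deploy"},
    "model_owner": {"train", "deploy", "modify"},
}


class AuditLog:
    """Append-only record of every access decision, allowed or denied."""

    def __init__(self):
        self.events = []

    def write(self, user, role, action, model, allowed):
        self.events.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "model": model,
            "allowed": allowed,
        })


def authorize(user, role, action, model, audit):
    """Role-based check: allow only actions granted to the role, and log the decision."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit.write(user, role, action, model, allowed)
    return allowed


audit = AuditLog()
print(authorize("alice", "data_scientist", "train", "fraud-model", audit))   # True
print(authorize("alice", "data_scientist", "deploy", "fraud-model", audit))  # False
print(len(audit.events))  # 2
```

Unknown roles receive an empty permission set, so the default is to deny.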

Strong governance supports ethical AI use and helps organizations meet regulatory requirements.

AI Infrastructure vs Traditional IT Infrastructure

AI infrastructure differs fundamentally from traditional IT systems. Traditional infrastructure supports stable workloads such as email, databases, and internal applications.

AI infrastructure is designed for continuous experimentation, rapid scaling, and dynamic data. Its higher power density often requires advanced cooling methods, including liquid or immersion cooling.

These differences explain why repurposing legacy infrastructure for AI often falls short.

Emerging AI Infrastructure Trends

Several trends now shape enterprise AI infrastructure strategies. Sovereign AI and private cloud deployments address data residency and national security concerns. Energy-integrated data centers link AI workloads directly to clean power sources to manage demand.
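A related software technique, swapped in here as an illustration rather than taken from any specific energy-integrated facility, is carbon-aware scheduling: flexible batch jobs such as training runs are shifted to the hours when the grid forecast is cleanest. The sketch below picks the lowest-carbon contiguous window with a sliding-window sum. The forecast values are hypothetical.

```python
def lowest_carbon_window(forecast, duration):
    """Return (start_hour, mean_intensity) of the cleanest contiguous window.

    forecast: hourly carbon-intensity forecast (e.g. gCO2/kWh), one value per hour.
    duration: job length in whole hours.
    Uses a sliding-window sum, so it runs in O(len(forecast)).
    """
    if not 0 < duration <= len(forecast):
        raise ValueError("duration must be between 1 and len(forecast)")
    window = sum(forecast[:duration])
    best_sum, best_start = window, 0
    for start in range(1, len(forecast) - duration + 1):
        # Slide right by one hour: add the new hour, drop the oldest.
        window += forecast[start + duration - 1] - forecast[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start, best_sum / duration


# Hypothetical 8-hour forecast; values are illustrative, not real grid data.
forecast = [420, 390, 310, 180, 150, 170, 300, 410]
start, mean = lowest_carbon_window(forecast, duration=3)
print(start, round(mean, 1))  # 3 166.7
```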

Edge AI infrastructure is expanding, enabling real-time inference close to data sources and reducing cloud dependency.

Each trend reflects the growing maturity of AI infrastructure planning.

Conclusion

AI infrastructure defines what artificial intelligence can deliver in practice. It determines reliability, scalability, and trust. Organizations that treat AI infrastructure as a strategic asset gain long-term control over innovation and risk. Those that ignore it inherit limitations that compound over time. Evaluate your foundations carefully before expanding your AI ambitions.

Practical Recommendations

Assess workloads before scaling infrastructure. Separate experimentation from production systems. Invest in data governance early. Design hybrid architectures for flexibility. Review performance, cost, and energy use continuously.

AI infrastructure should evolve alongside models and regulations.

FAQs

What makes AI infrastructure different from traditional IT?

AI infrastructure is built for parallel processing, massive data volumes, and continuous model iteration.

Do all AI systems require GPUs or accelerators?

Not all. Smaller models and classical machine learning methods often run well on CPUs, but most modern deep learning workloads benefit significantly from specialized compute.

Is AI infrastructure only relevant for large enterprises?

No. Scalable cloud and hybrid models make AI infrastructure accessible to smaller organizations as well.