Introduction

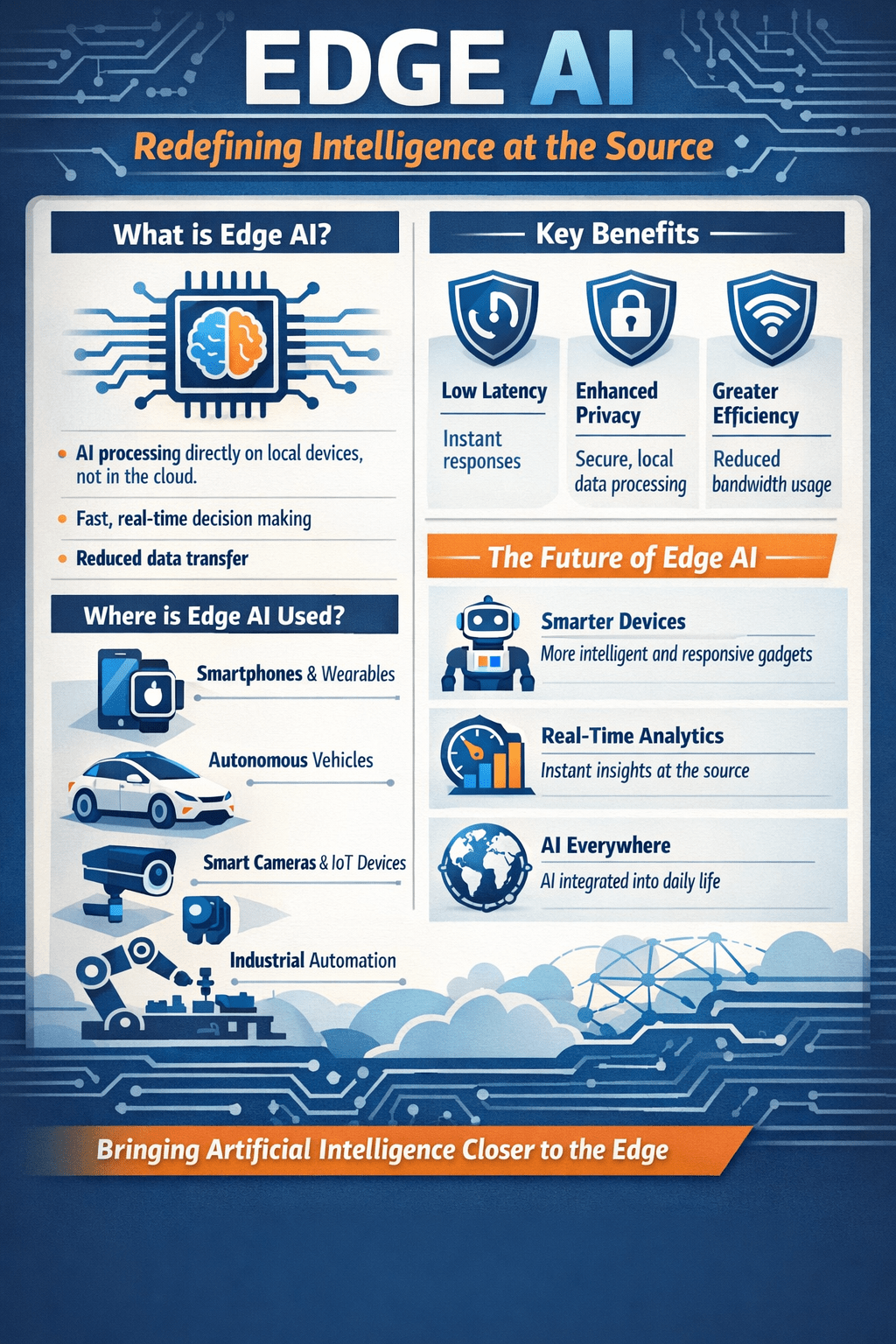

Edge AI runs artificial intelligence directly on local hardware such as sensors, cameras, and machines, rather than in centralized cloud data centers. Processing data where it is created enables real-time analytics and immediate decision-making. As businesses move AI from pilot projects to full-scale production, the economic and practical limitations of cloud-only models are prompting a strategic rebalancing of computing resources.

Edge AI is a critical component of this new, hybrid infrastructure, delivering speed, privacy, and reliability that the cloud alone cannot provide.

The Core Mechanism: Localized Real-Time Inference

The primary function of Edge AI is to perform real-time inference on the device where data is generated. This relies on specialized hardware, such as AI accelerators, together with models optimized through techniques like quantization and pruning, which compress them to fit the strict power and memory constraints of edge devices. Running the model locally eliminates the network round-trip to the cloud. This is why an autonomous vehicle can identify an obstacle and react in milliseconds, and why a factory sensor can predict equipment failure without waiting for data to be sent elsewhere for analysis.
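To make the round-trip argument concrete, here is a minimal sketch of a latency budget for one request. Every figure in it is an illustrative assumption rather than a measurement, and the names `LatencyBudget` and `meets_deadline` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Per-request latency components in milliseconds (all values hypothetical)."""
    capture_ms: float      # sensor read and preprocessing
    network_rtt_ms: float  # round trip to a remote endpoint (0 for on-device)
    inference_ms: float    # model execution time

    def total_ms(self) -> float:
        return self.capture_ms + self.network_rtt_ms + self.inference_ms


def meets_deadline(budget: LatencyBudget, deadline_ms: float) -> bool:
    """True when the whole pipeline fits inside the control-loop deadline."""
    return budget.total_ms() <= deadline_ms


# Assumed figures: a fast cloud model behind a network hop versus a slower
# on-device model with no network hop at all.
cloud = LatencyBudget(capture_ms=5.0, network_rtt_ms=80.0, inference_ms=10.0)
edge = LatencyBudget(capture_ms=5.0, network_rtt_ms=0.0, inference_ms=25.0)

deadline_ms = 50.0
for name, budget in (("cloud", cloud), ("edge", edge)):
    print(name, budget.total_ms(), meets_deadline(budget, deadline_ms))
```

Under these assumed numbers the edge path fits a 50 ms deadline even though its model runs more slowly, because the network term dominates the cloud path.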

The Imperative Drivers: Economics, Latency, and Sovereignty

The adoption of Edge AI is driven by concrete business and technical imperatives. First, inference economics are forcing a rethink: although cloud API costs per query have fallen, total spending can spiral under high-volume, continuous use, which makes local processing more economical for predictable workloads. Second, ultra-low latency is non-negotiable for applications like robotic surgery or autonomous systems, where the delay of a cloud round trip cannot be tolerated. Third, data sovereignty and privacy regulations increasingly require data to be processed and stored within specific jurisdictions, a requirement most easily met by keeping sensitive information on-premises.
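The economics point can be expressed as a simple break-even calculation. The sketch below assumes a flat per-request cloud price and a linearly amortized edge device. The prices, the amortization period, and the function names are all hypothetical, and a real comparison would also include engineering and maintenance costs.

```python
def monthly_cloud_cost(requests_per_month: int, price_per_1k_requests: float) -> float:
    """Cloud spend grows linearly with request volume."""
    return requests_per_month / 1000 * price_per_1k_requests


def monthly_edge_cost(hardware_cost: float, amortization_months: int,
                      monthly_operating_cost: float) -> float:
    """Edge spend is roughly fixed: amortized hardware plus power and upkeep."""
    return hardware_cost / amortization_months + monthly_operating_cost


def break_even_requests(price_per_1k_requests: float, hardware_cost: float,
                        amortization_months: int,
                        monthly_operating_cost: float) -> float:
    """Monthly request volume above which the edge device is the cheaper option."""
    edge = monthly_edge_cost(hardware_cost, amortization_months, monthly_operating_cost)
    return edge / price_per_1k_requests * 1000


# Assumed inputs: 1.00 per thousand cloud requests, a 600 device amortized
# over 24 months, and 5 per month in power and upkeep.
threshold = break_even_requests(1.0, 600.0, 24, 5.0)
print(f"edge is cheaper above {threshold:.0f} requests per month")
```

Because the edge cost is fixed while the cloud cost scales with volume, steady high-volume workloads cross the threshold quickly, whereas bursty or low-volume ones may never reach it.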

The Technical Enablers: Hardware and Model Optimization

Successful deployment hinges on two key enablers. First, purpose-built AI hardware, from microcontrollers to modules like the NVIDIA Jetson, provides the necessary performance-per-watt. Second, sophisticated model optimization is mandatory. Techniques such as quantization (reducing numerical precision) and pruning (removing unnecessary neural network parameters) shrink powerful models so they can operate within the thermal and power limits of an edge device. Without this optimization, a model that runs comfortably in a data center will often not fit on the device at all.
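The two techniques can be illustrated on a plain list of weights. This is a minimal sketch under stated assumptions: symmetric per-tensor int8 quantization and simple magnitude pruning, written in pure Python for readability. Production toolchains apply the same ideas per layer with calibration data and fine-tuning, and the function names here are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:n_prune])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.5, -1.27, 0.01, 0.8, -0.02, 0.3]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("int8 values:", quantized)
print("max reconstruction error:", max_error)

print("pruned:", prune_by_magnitude(weights, sparsity=0.5))
```

Quantization trades a bounded rounding error (at most half the scale per weight) for a 4x reduction in storage relative to 32-bit floats, while pruning produces zeros that sparse formats or sparsity-aware hardware can skip.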

Architectural Integration: The Hybrid and Agentic Future

Few systems are purely edge-based. Most enterprises adopt a hybrid edge-cloud architecture, where the edge handles immediate, time-sensitive inference and the cloud manages model retraining and macro-analytics. This approach is evolving with agentic AI, meaning systems in which AI agents autonomously perform multi-step tasks. In a hybrid model, an agentic system could use edge nodes for real-time perception and action (like monitoring industrial equipment) while coordinating longer-term strategy and reporting through the cloud. The result is a single intelligent layer that spans local devices and central systems.
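One possible way to split responsibilities is sketched below: the edge node acts immediately on confident predictions and queues uncertain ones for later upload, where they could feed retraining. The `EdgeNode` class, the confidence threshold, and the queue-based upload are assumptions made for illustration, not a prescribed architecture.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeNode:
    """Hypothetical edge node that acts locally and defers uncertain cases."""
    confidence_threshold: float = 0.8
    cloud_queue: list = field(default_factory=list)

    def handle(self, reading_id: str, label: str, confidence: float) -> str:
        """Decide on-device; never block on the network."""
        if confidence >= self.confidence_threshold:
            return f"act:{label}"
        # Low-confidence samples are kept for the cloud to review or retrain on.
        self.cloud_queue.append(
            {"id": reading_id, "label": label, "confidence": confidence}
        )
        return "safe_fallback"

    def flush_to_cloud(self) -> list:
        """Return and clear the queued samples (stand-in for a batched upload)."""
        batch, self.cloud_queue = self.cloud_queue, []
        return batch


node = EdgeNode()
print(node.handle("r1", "overheat", 0.95))
print(node.handle("r2", "overheat", 0.40))
print(len(node.flush_to_cloud()), "sample(s) sent for cloud review")
```

The time-critical path never waits on the network, and uploads happen in batches whenever connectivity allows.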

Operational Realities: Security and Management Trade-offs

The trade-off for gaining speed and privacy is increased operational complexity. Security becomes a distributed challenge; each edge device is a potential network entry point and may be physically accessible, requiring measures like secure boot and hardware-based isolation. Furthermore, managing the lifecycle of AI models across thousands of disparate devices introduces significant overhead. How will your organization ensure consistent, secure updates to a fleet of ten thousand intelligent traffic cameras or medical sensors? This demands a robust edge MLOps strategy from the outset.
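Two building blocks of such a strategy are staged rollouts and artifact integrity checks. The sketch below assumes a fleet identified by string IDs and a model file distributed with a published SHA-256 digest. The wave percentages and function names are hypothetical, and a digest check alone is not a substitute for signed updates or secure boot.

```python
import hashlib


def sha256_hex(blob: bytes) -> str:
    """Hex digest of a model artifact."""
    return hashlib.sha256(blob).hexdigest()


def verify_artifact(blob: bytes, expected_sha256: str) -> bool:
    """Reject a model file whose digest differs from the published one."""
    return sha256_hex(blob) == expected_sha256


def rollout_waves(device_ids, cumulative_percent=(1, 10, 100)):
    """Split a fleet into waves: a small canary group, a wider ring, then the rest."""
    ordered = sorted(device_ids)
    n = len(ordered)
    waves, start = [], 0
    for pct in cumulative_percent:
        # Each wave extends coverage up to `pct` percent of the fleet,
        # and contains at least one device while any remain.
        end = min(n, max(start + 1, n * pct // 100))
        waves.append(ordered[start:end])
        start = end
    return waves


fleet = [f"cam-{i:05d}" for i in range(10_000)]
waves = rollout_waves(fleet)
print([len(w) for w in waves])

model_blob = b"placeholder model bytes"
published_digest = sha256_hex(model_blob)
print(verify_artifact(model_blob, published_digest))
```

Updating a small canary group first limits the blast radius of a bad model, and each device can refuse an artifact whose digest does not match before loading it.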

Transformative Applications across Industries

The applications span many industries. In manufacturing, Edge AI enables predictive maintenance and real-time visual inspection on the production line. In healthcare, wearable devices can monitor vital signs and detect medical events locally, which protects patient privacy and allows an immediate response. Autonomous systems, from vehicles to drones, rely on edge processing for navigation and safety. Each case shares a common thread: the decision must be made where the physical event occurs, often under constraints of bandwidth, connectivity, or regulation.
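As a small illustration of a decision made where the event occurs, the sketch below flags unusual sensor readings on-device with a rolling z-score. It stands in for the far richer models used in real predictive maintenance. The window size, the threshold, and the class name are assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags readings that deviate strongly from a recent window of values."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold

    def update(self, value: float) -> bool:
        """Return True if `value` looks anomalous relative to recent history."""
        is_anomaly = False
        # Only judge once the window is full, so early readings are never flagged.
        if len(self.history) == self.history.maxlen:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                is_anomaly = True
        # Anomalous readings are kept out of the baseline.
        if not is_anomaly:
            self.history.append(value)
        return is_anomaly


detector = RollingAnomalyDetector(window=5, z_threshold=3.0)
readings = [1.0, 1.1, 0.9, 1.0, 1.05, 1.02, 5.0]  # hypothetical vibration levels
flags = [detector.update(r) for r in readings]
print(flags)
```

The detector keeps only a handful of recent values in memory and needs no connectivity, which suits the bandwidth and power constraints described above.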

Conclusion

Edge AI marks the maturation of artificial intelligence from a centralized service into an embedded, responsive capability. It is an architectural and strategic response to the physical, economic, and regulatory limits of purely cloud-centric computing. Success requires embracing new disciplines in hardware integration, model optimization, and distributed systems management. The outcome is intelligence that is not only powerful but also immediate, private, and resilient, and that creates value at the point of action in the physical world.

FAQs

What is the main difference between AI training and inference in edge computing?

Training builds and refines the AI model, a computationally intensive process typically done in the cloud. Inference is the application of the trained model to new data, which is the primary task of Edge AI, optimized for speed and efficiency at the source.

Is Edge AI more secure than cloud AI?

It offers a different security profile. Data remains local, reducing interception risks during transmission. However, it expands the attack surface, as each distributed device must be individually secured against both physical and network threats, requiring a dedicated security strategy.

Can Edge AI systems learn and improve over time?

Individually, edge devices typically perform fixed inference. However, they can contribute to collective learning through frameworks like federated learning, where devices share model improvements without exchanging raw data, allowing the central cloud model to evolve while preserving privacy.
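The aggregation step at the heart of federated learning can be sketched as a weighted average of client model weights, often called federated averaging. The example below is a simplified illustration in pure Python: it assumes each client reports a flat list of weights plus its local sample count, and it omits the local training, secure aggregation, and communication layers a real framework would provide.

```python
def federated_average(client_updates):
    """Average client weight vectors, weighted by each client's sample count.

    client_updates: list of (weights, num_samples) pairs, where every
    `weights` list has the same length. Only weights are shared, never raw data.
    """
    total_samples = sum(n for _, n in client_updates)
    if total_samples == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must report the same number of weights")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total_samples
    return averaged


# Two hypothetical devices: the second trained on three times as much data,
# so its weights count three times as much in the global model.
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
print(federated_average(updates))
```

The server then sends the averaged weights back to the devices for the next round.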