Introduction

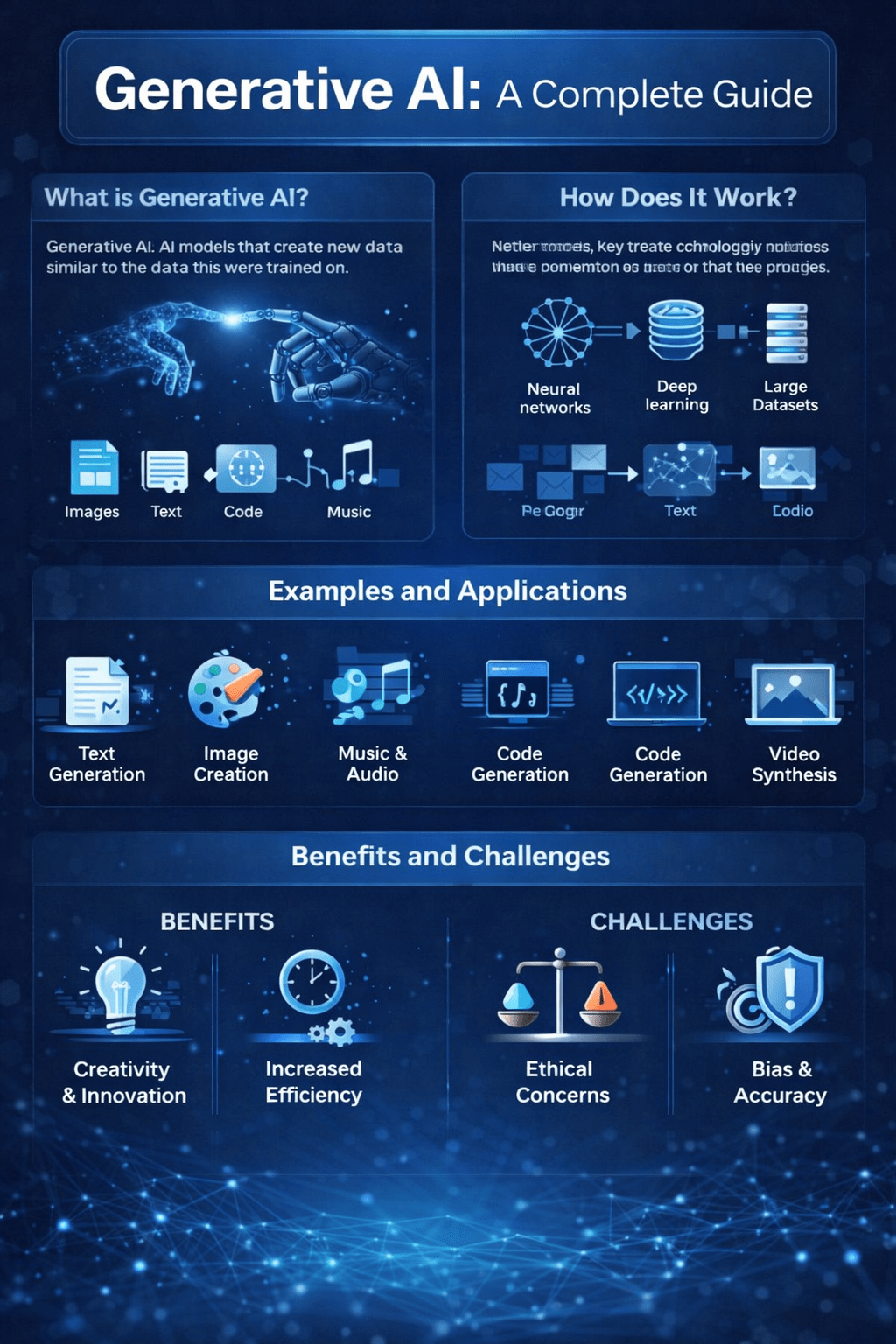

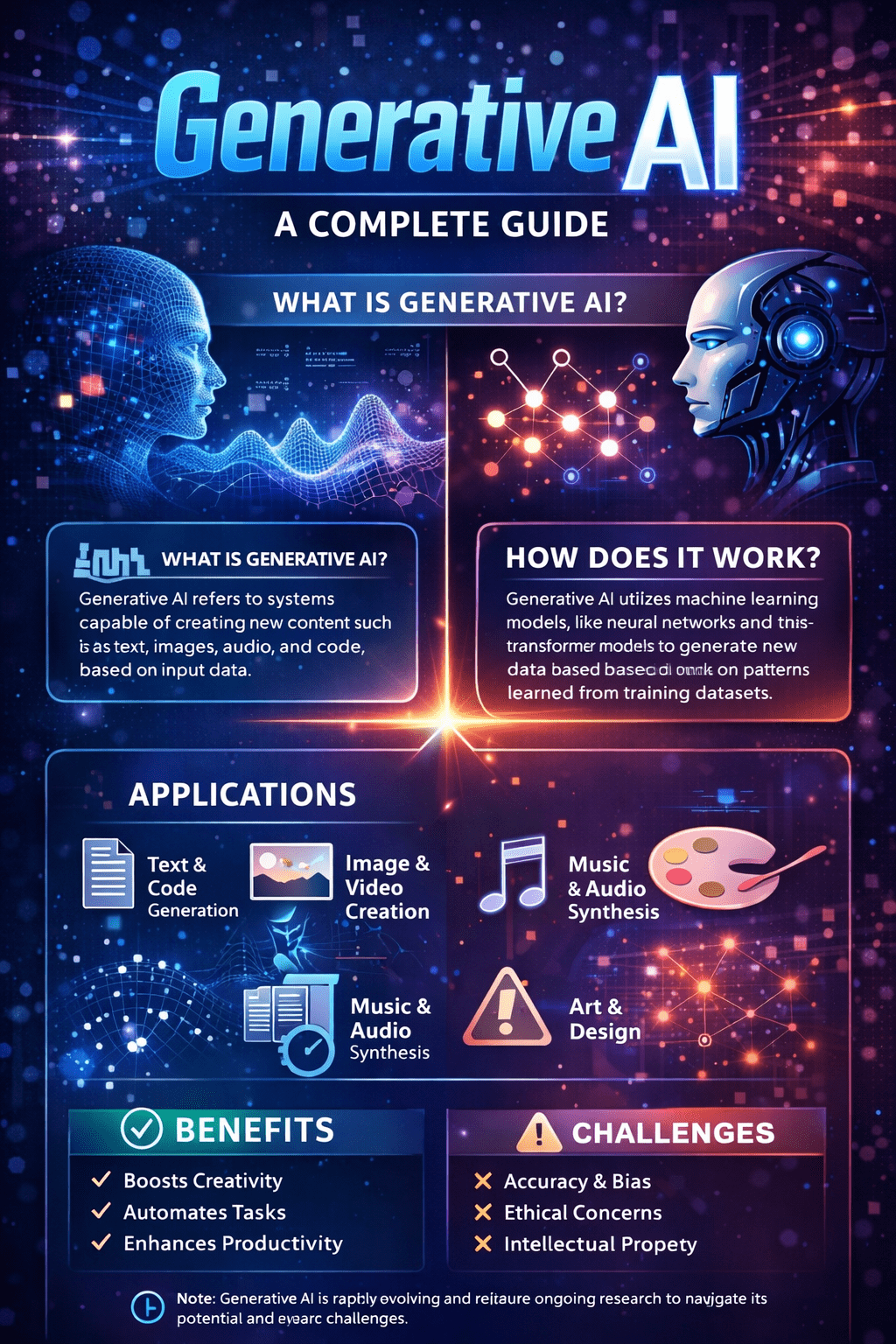

Generative AI is a subset of artificial intelligence focused on creating new content from learned patterns, rather than only classifying or interpreting existing information. Modern generative AI systems can produce text, images, video, audio, and synthetic data across varied domains. This marks a shift from traditional AI, which typically predicts or categorizes: generative AI produces original outputs from input prompts and the patterns it has learned. Tools such as ChatGPT, Midjourney, and Stable Diffusion exemplify how generative AI is reshaping creativity and productivity across industries.

Generative AI in Simple Terms

At its core, generative AI uses deep learning and large neural networks to understand patterns in massive datasets and create new content with similar characteristics. Unlike discriminative models that differentiate classes of data, generative models approximate the underlying distribution of the data to produce novel outputs.
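As a rough illustration of that distinction, the sketch below fits a simple Gaussian to a handful of made-up height measurements and then samples new values from it, while a threshold rule only assigns labels. The data, the `fit_gaussian`, `generate`, and `classify` names, and the choice of a Gaussian are all assumptions for illustration; real generative models learn far richer distributions.

```python
import random
import statistics

def fit_gaussian(samples):
    """Estimate the mean and standard deviation of 1-D data."""
    return statistics.mean(samples), statistics.stdev(samples)

def generate(mean, std, n, rng):
    """Generative use: draw n new values from the fitted distribution."""
    return [rng.gauss(mean, std) for _ in range(n)]

def classify(x, threshold):
    """Discriminative use: assign a label; this rule cannot create data."""
    return "tall" if x >= threshold else "short"

rng = random.Random(0)
heights_cm = [158.0, 162.5, 165.0, 170.2, 171.8, 175.5, 180.1, 183.4]  # made-up data
mean, std = fit_gaussian(heights_cm)
new_heights = generate(mean, std, 5, rng)   # novel values resembling the data
label = classify(190.0, threshold=170.0)    # a label for one existing value
```

The generative side returns values that were never in the dataset but follow its overall shape, which is the property the paragraph above describes.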

This generative capability has expanded with advances in model architecture, especially transformers, which capture contextual relationships in data and have enabled large language models (LLMs) to generate coherent, contextually relevant text.

How Generative AI Works

Generative AI typically follows a multi‑step process:

- Training on Large Data – The model is trained on text, images, or other media to identify patterns.

- Pattern Learning – Deep learning architectures such as transformers encode relationships across data elements.

- Generation – Given a prompt, the model predicts and synthesizes new content that resembles the distribution of its training data.

- Refinement – Some models refine outputs iteratively for higher quality (e.g., diffusion models remove noise step by step to create coherent images).
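The first three steps can be sketched with a deliberately tiny stand-in for a real model: a bigram table that records which word follows which in a training text, then samples from that table to extend a prompt. The corpus and the `train_bigram` and `generate` names are hypothetical, and a word-pair table is a vast simplification of a neural network; the refinement step is not shown.

```python
import random
from collections import defaultdict

def train_bigram(corpus):
    """Training and pattern learning: record which word follows which."""
    table = defaultdict(list)
    words = corpus.split()
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table

def generate(table, prompt, max_words, rng):
    """Generation: extend the prompt by sampling an observed next word."""
    output = [prompt]
    for _ in range(max_words - 1):
        candidates = table.get(output[-1])
        if not candidates:  # no observed continuation, so stop early
            break
        output.append(rng.choice(candidates))
    return " ".join(output)

corpus = "the cat sat on the mat and the dog sat on the rug"  # toy training text
model = train_bigram(corpus)
text = generate(model, "the", 6, random.Random(42))
```

Every word pair in the generated text was seen during training, yet the full sentence need not appear in the corpus, which is the sense in which the output resembles the training data without copying it.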

Generative models often require substantial compute resources and vast datasets during training, but a pre‑trained model can be adapted to a specific task with a much smaller fine‑tuning dataset, which is usually easier than training from scratch.

Core Types of Generative AI Models

Large Language Models (LLMs)

LLMs, such as OpenAI’s GPT series, are trained to generate text that is contextually relevant and human‑like. They achieve this by repeatedly predicting the next token (a word or word fragment) in a sequence, using attention mechanisms to weigh the preceding context. LLMs also power applications like chat interfaces, summarization tools, and code generation.
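To make the attention mechanism mentioned above more concrete, here is a minimal sketch of scaled dot-product attention for a single query vector, written in plain Python. The 2-D vectors are made-up numbers and the `attend` helper is hypothetical; production LLMs use learned projections, many attention heads, and many stacked layers before scoring candidate next tokens.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return context, weights

# Toy vectors for three earlier tokens (illustrative numbers only).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query = [1.0, 1.0]
context, weights = attend(query, keys, values)
```

The third key aligns best with the query, so it receives the largest weight; the resulting context vector is a weighted blend of the value vectors.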

Generative Adversarial Networks (GANs)

Generative Adversarial Networks involve a generator and a discriminator working in opposition: the generator creates data, and the discriminator evaluates authenticity. Over time, this competition leads to highly realistic outputs such as synthetic images.
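The opposing goals can be expressed as two loss functions. The sketch below uses the standard binary cross-entropy formulation with the commonly used non-saturating generator loss; the discriminator scores are hypothetical numbers rather than outputs of a trained network, and all scores are assumed to lie strictly between 0 and 1.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Low when real samples score near 1 and generated samples near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating loss: low when generated samples are scored as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: probability that each sample is real.
real_scores = [0.90, 0.85, 0.95]
early_fake_scores = [0.05, 0.10, 0.08]  # early in training: fakes are easy to spot
later_fake_scores = [0.45, 0.50, 0.55]  # later: fakes are nearly indistinguishable

g_early = generator_loss(early_fake_scores)
g_later = generator_loss(later_fake_scores)
d_early = discriminator_loss(real_scores, early_fake_scores)
d_later = discriminator_loss(real_scores, later_fake_scores)
```

As the generator improves, its loss falls while the discriminator's loss rises, which is the competition described above.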

Diffusion Models

Diffusion models begin with random noise and iteratively refine it into structured outputs—particularly effective for detailed image generation. Models like Stable Diffusion use this approach to create high‑quality visuals from text prompts.
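The relationship between noise and data can be sketched with the closed-form noising step used in common diffusion formulations. This is an illustration under stated assumptions: the linear noise schedule and its endpoint values are chosen for demonstration, the four-element list stands in for pixel values, and the true noise is passed in as an oracle where a trained network would supply a prediction. Real samplers repeat a denoising update over many steps rather than inverting once.

```python
import math
import random

def make_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal-retention factors for a linear noise schedule."""
    alpha_bars, running = [], 1.0
    for t in range(steps):
        beta = beta_start + (beta_end - beta_start) * t / (steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, noise):
    """Forward process: blend clean data with Gaussian noise."""
    keep, spread = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [keep * x + spread * n for x, n in zip(x0, noise)]

def estimate_clean(xt, alpha_bar, predicted_noise):
    """Invert the forward step given an estimate of the added noise."""
    keep, spread = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(x - spread * n) / keep for x, n in zip(xt, predicted_noise)]

rng = random.Random(0)
alpha_bars = make_schedule(steps=1000)
x0 = [0.2, -0.5, 0.9, 0.0]                 # stand-in for pixel values
noise = [rng.gauss(0.0, 1.0) for _ in x0]
xt = add_noise(x0, alpha_bars[500], noise)
# A trained network would predict the noise; the true noise is used here as an oracle.
recovered = estimate_clean(xt, alpha_bars[500], noise)
```

With a perfect noise estimate the clean data is recovered exactly, which is why training a diffusion model amounts to learning to predict the noise well.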

Variational Autoencoders (VAEs)

VAEs compress input into a latent space and reconstruct variations from it, enabling controlled generation and interpolation. Though less prominent than LLMs or diffusion models for large‑scale content generation, VAEs were influential among early generative techniques.
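Two ideas from this description, sampling from the latent space and interpolating within it, can be sketched without a neural network. The encoder outputs below (a mean and log-variance per latent dimension) are hypothetical numbers, and the `sample_latent` and `interpolate` helpers are illustrative; a real VAE would pass each latent vector through a trained decoder to produce an output.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Reparameterization: z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def interpolate(z_a, z_b, t):
    """Linear blend between two latent vectors: t=0 gives z_a, t=1 gives z_b."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]

rng = random.Random(0)
# Hypothetical encoder outputs for two different inputs.
z_a = sample_latent([0.0, 1.0], [-2.0, -2.0], rng)
z_b = sample_latent([2.0, -1.0], [-2.0, -2.0], rng)
# Five evenly spaced points on the path from z_a to z_b.
path = [interpolate(z_a, z_b, step / 4) for step in range(5)]
```

Decoding each point on `path` would yield outputs that shift gradually from one input to the other, which is what makes the latent space useful for controlled generation.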

Real‑World Applications

Generative AI is now widely used across domains:

- Text Generation – Automated writing, summaries, emails, and conversational agents.

- Image and Video Creation – Tools like Midjourney and Stable Diffusion generate unique visual content from prompts.

- Synthetic Data – Augmenting datasets for training machine learning models without compromising privacy.

- Healthcare and Science – Supporting drug design, medical imaging, and simulation of biological processes.

- Creative Industries – Art, music, and media production driven by AI‑generated assets.

These applications illustrate how generative AI enhances productivity, creativity, and problem‑solving by automating or augmenting tasks once limited to human creators.

Benefits and Challenges

The benefits of generative AI include automation of content creation, rapid prototyping of ideas, and expanded creative possibilities. It enables scaling of personalization in services, supports data privacy through synthetic data, and drives innovation in research and design.

However, challenges remain. Models can generate inaccurate or biased outputs due to limitations of training data and architecture. Techniques such as GANs may suffer from issues like mode collapse, where the model produces limited variation in outputs. Ethical concerns, including intellectual property, misinformation, and deepfake misuse, demand careful governance and human oversight to ensure responsible deployment.

Conclusion

Generative AI represents a fundamental evolution in how machines interact with information: from pattern recognition to creative synthesis. With advances in transformers, diffusion techniques, and adversarial training, these models are no longer limited to research labs—tools based on generative AI are ubiquitous in consumer and enterprise applications. By embracing generative AI responsibly, organizations can unlock powerful new pathways in creativity, automation, and problem-solving while remaining mindful of ethical and technical challenges.

FAQs

What distinguishes generative AI from traditional AI?

Generative AI creates new content based on learned patterns, while traditional AI focuses on predictions or classifications from existing data.

Why are tools like ChatGPT so popular?

Tools like ChatGPT are popular because they are easy to interact with and broadly useful for generating text, answers, and ideas.

Can generative AI be biased or inaccurate?

Yes—models can reflect biases in training data or produce implausible outputs, so human review remains essential.